IN Java, Memory Management means Garbage Collection and memory allocation. Memory allocation is very small process as compared to Garbage Collection. Indeed, a well playing Garbage collection makes everything easy for memory allocation. Only major issue before memory allocation is Weather sufficient Memory available?. And its Garbage Collector responsibility to ensure enough memory is available all the time, otherwise ready to a face biggest obstacle “OutOfMemory” in running application. Writing a efficient Garbage Collection algorithm is very tedious task. Thanks to JVM, that they come up with several algorithms, and by default apply the best one. In day to day programming one might never feel a need to understand those algorithms, unless you have passion for knowing whats going on under the hood. OR some day after years of programming you face “OutOfMemory” Exception, and then you check that there are plenty of options which can be used to avoid such situation, for example JVM comes with several algorithms, and you start googling which one to use. After so many years of working over Java/J2EE i really need a feel to understand those algorithms, JVM memory management, memory sizing, and defaults.

Recently I attended a session by Chris Bailey in IBM, got some insight of the Memory Management in Java.

After reading this complete post, one can would be able to efficiently manage the Memory and avoid any memory leak.

The Java Process –

We are taking here example of 32 bit architecture. Memory management is complicated in 32 bit architecture. IN 64 bit architecture, we have huge memory, OR we can assign large chunk of memory to Java, and it comes with a cost of performance.

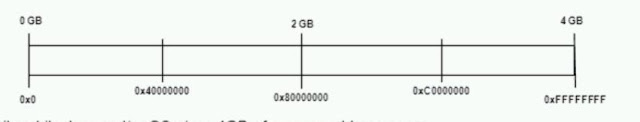

Java is a OS level process. There are some restriction imposed by the OS and architecture. 32 bit architecture means Processor has registers which are 32 bit wide. 32 it can hold values between 0×0 to 0xFFFFFFFF, which is ability to address upyto 4GB of memory. 32 bit process cant use more than 4GB memory for the process itself. ON 32 bit architecture and/or OS gives max. of 4GB of process address space.

Some memory is used by the OS and C-language runtime. It depends on which OS you are running. Below is a simplified diagram -

In windows it sits between nearly 2GB memory. IN LINUX it takes nearly 1GB memory. Area left over is termed as “User Space”, So on LINUX Java Runtime sits over 3 GB of memory, i.e. 3 GB of User Space. Some of the memory of the USer Space is going to be used by Java Virtual Machine.

JVM memory contains execution engine, JIT compiler, etc. which in itself require some memory.

In the User Space some memory is used by the JVM runtime, which is termed as Java Heap. Java Heap has min. and max. size. Minimum heap(Initial Size) size is set using -Xms and max. heap size is set using -Xmx. for example – Running following command on console java -Xmx1024M -Xms512M.

Apart from Java Heap there is a Native(System) Heap. Native Heap is User Space – Java Heap. Native Heap is required to run threads, used by JIT Compiler to store runtime data and executable code, Classes and ClassLoaders, JNI, java.nio.ByteBuffers, and Java runtime data.

There is automatic selection of Java Heap Sizes, and this is based one classification, i.e. weather machine is server class or client class.

A server class machine is defined to be one with:

2 or more physical processors AND 2 or more gigabytes of physical memory. ON machine that are not Server Class the default values are Initial heap size of 4 MB and Max. heap size of 64 MB. ON server class machine Initial heap Size is 1/64th of the physical machine and Max. heap size of 1/4th of the physical memory.

Typically on 32bit architecture, single large chunk can’t use more than 4 GB. (Some OS limit this to 2GB or 3GB). Based on facts we can say, we cant give more 2GB of memory to Java Heap on Windows. Since total we have 4GB, and some is required by OS and C-language runtime, some is required by JVM , and some is required by Native Heap. And ideally it should be around 1.5GB on Windows. On operating systems which impose a 2 GB limit on process size, use a heap size no larger than 1430 MB. It is generally recommended that the minimum and maximum heap sizes are set to the same value to maximize run time performance. ( We would discuss this later)

While setting the Maximum heap size, we must consider the memory requirements of theOS and C runtime, JVM, and native heap.

The memory size of the native heap, should be sufuciently large, specially when there are large number of threads involved.

A number of Java objects are underpinned by the OS level resources, therefore have associated native heap memory. When we create a java.land.Thread object, you need an execution thread( P thread on Unix). And that execution thread also has a Execution stack. and that takes memory. Java Thread takes 160 bytes, and along with that 160 k required on native heap.

Some platforms come with high performance threads, for eg. in following BEA JRockit JVM optimized for Intel architecture.

Source – http://software.intel.com/en-us/articles/intel-technology-provides-the-basis-for-next-generation-mrtes/

Some times you are allocating some memry on Java Heap, and allocating 100 times larger memory on Native Heap. When you create a Java Object, you may be allocating off Java Heap memory also. A small memory leak in Java Heap can lead to much bigger memory leak in Native Heap. You can get OutOfMemory in case you exhaust Java Heap, you can also get OutOfMemory Error when you exhaust Native Heap. For example, you might get - java.lang.OutOfMemoryError: unable to create new native thread.

In order to avoid any OutOfMemory error, and avoid any memory leak we need to manage memory efficiently. Memory Management is all about recognizing which objects are no longer needed, freeing the memory used by such objects, and making it available. In many modern Object oriented languages this process is automatic, and this automatic process is called Garbage Collection. There are several Algorithms which are used by Garbage Collector. Most efficient algorithm should be used by the collector. Applications consume memory, and way the memory is consumed, should be a criteria for choosing the garbage collector algorithm. A wrong choice may lead to OutOfMemory. Garbage Collector comes with default Algorithm, and which is suitable for most applications. At the end its applications responsibility to set all references to null for any object, if it is no longer required. This would be an indication to Garbage Collector that object is garbage, and it should be collected. An understanding of Garbage Collector algorithms would give you clear insight of how memory is managed, and also how application programmer can contribute to make memory management more efficient

Garbage Collection Algorithms -

Reference Counting - Most straight forward GC(Garbage Collection) algorithm is reference counting. Its most simple algorithm, where we count the number of references to each object. If count is zero for any object, consider the object to be garbage. Compiler has responsibility of increasing the count, after executing any assignment statement for any particular object. The major issue with this algorithm is that it can never claim unreachable cyclic references. for example in the following figure –

Objects – Obja, Objb, Objx, and Objy are unreachable objects. But their reference count is one, so they can never be collected, even though they are unreachable from stack, and they are no longer used. Reference Counting Algorithm would surely fail in collecting some no longer used objects like Obja, Objb, Objx, and Objy.

If we think a bit to find out solution to limitation of the Reference Counting algorithm, we know, any object which is not reachable from the root set(Stack) should be collected. One way to do this mark such objects in one go, and then collect them. Tracing Algorithmsstop the applications running(referred as Stop the World) start from the root set, trace every object and mark each reachable object as live. Mark Sweep is a tracing algorithm, which completes in two steps.

Step 1 – Mark all live objects(Reachable from rot set), Mark phase.

Step 2 – Any object not marked is garbage, and it is reclaimed, and memory climed by them is freed, Sweep phase.

We can definitely say in Mark Sweep Algorithm objects like Obja, Objb, Objx, and Objy would be collected by Garbage Collector. Drawback of Mark Sweep lies in second step, where we are visiting every object, finding out weather it is live or garbage depending upon criteria that it is marked or not. Second major drawback of Mark Sweep is that it leaves memory fragmented, as shown in below figure –

Above is the picture of Java Heap, after several run of Garbage Collector using MarkSweep Algorithm. the white spaces are free memory, and blue ones are currently used by objects. We can clearly see that memory is fragmented. A fragmented memory can also also lead to OutOfMemory, which in this case means there is memory available for not enough contiguous memory is available to hold some large object. Which can come when you create a new object, but enough contiguous memory is not available to hold the object. So, we can say there are two major drawbacks of Mark Sweep – 1. Every objects needs to be visited in Sweep phase.

2. Fragmented memory.

In order to resolve the drawbacks of the Mark Sweep, copying Algorithm was introduced, and to resolve the some drawbacks of copying, Mark Compact algorithm was used.

Copying Algorithm - Its another form of tracing algorithm, where heap is divided into two equal parts. Initial allocation of objects are done in one part only. So, only one part is containing active data, and other is unused.

Above we can see that all objects are allocated memory in the left half of the Java Heap, and since the left half is about to fill, we trace all the objects in the left half and copy all live objects to the right half of the Heap. So left half is only left with the garbage, and that can be collected easily. Check following figures –

Therefore, we can see we resolved both drawbacks of the Mark Sweep, we only needed to visit the garbage objects during sweep phase, and also we don’s have a fragmented memory. The major trouble with Copying Algorithm is that it requires double the space of Mark Sweep in order to give same performance. Somehow we need to incorporate the suggestions of copying Algorithm into Mark Sweep and come up with Mark CompactAlgorithm.

Mark Compact Algorithm(Mark-Sweep-Compact) - Its Mark Sweep combined with Copying, so increased complexity. Here every live object is marked in one phase. All live objects are compacted to one bottom of heap, and rest are garbage so collected. Compaction can be partial also, but if complete compaction is performed in one cycle of garbage collection, at the end heap would be similar to, result of copying garbage collector.

Above were some of the possible Garbage Collection algorithms. We can come up some more complicated and more efficient algorithms, and definitely current JVM’s are using much more complicated algorithm. One of the major challenge before any algorithm is time lost during “Stop The World”.

Before we discuss more garbage collection algorithms, we must know one general criteris in which objects can be divided – Old Objects and Young Objects.

One of the reality of Java Objects which can be utilized while programming garbage collection algorithm is that most of the objects die young, they are short lived. By Short lived we can say they can only survive only few cycles of garbage Collection. Any such object is called Young Objects. Some are long lived Objects, for example, in a Web Application, they stay for few hours. They are called Old Objects. Some studies say 98% of the objects die young. Copying algorithm works very well with such objects, as they don’t visit the dead objects. Dead objects would be one side of the heap, and would reclaimed in one swoop. On the other hand in case of old objects, copying algorithm is worse, as it would keep on copying the old objects over and over from one side to another.

Based on above classification and analysis, we can say we should have separate algorithms for young and old objects. And how we know weather object is young or old, best way is to keep young and old objects separate. And this was the basis of many Java Heap structure. A need to differentiate young and old objects gave birth to idea of Generational Collection.

A generational collector divides the heap into multiple generations.

HotSpot JVM(currently maintained by Oracle) divides the heap into Young and Old(Tenured) Generation. Objects are created in the young generation, and objects that meet some promotion criteria, such as having survived a certain number of collections, are then promoted to the next older generation, old(/Tenured) generation. A generational collector is free to use a different collection strategy for different generations and perform garbage collection on the generations separately. Please find below the architecture of the HotSpotJVM –

Source -

http://www.oracle.com/technetwork/java/javase/gc-tuning-6-140523.html Here we can see in figure Young Generation consists of Eden, Survivor and Virtual regions. Virtual is the address space which is virtually reserved but not allocated physical memory unless needed. Object is initially allocated to Eden(/creation), and copied to any survivor space by Copying GC, before being promoted to Tenure generation. Objects are copied between survivor spaces multiple times before being promoted to Tenure Generation. Apart from Young and old generation, some JVM’s like HotSpot include

Permanent Generation, which holds objects describing classes and methods or classes and methods themselves. We only need to consider Young and Old generation while deciding for Algorithms.

Oracle JRockit divides heap into two generations – Nursery and Tenured. Along with Nursery and Tenured region also. Before objects being promoted to Tenure Region they are kept in keep region. And objects need to survive one GC cycle(while in keep area), before being promoted to Old(/Tenure) region.

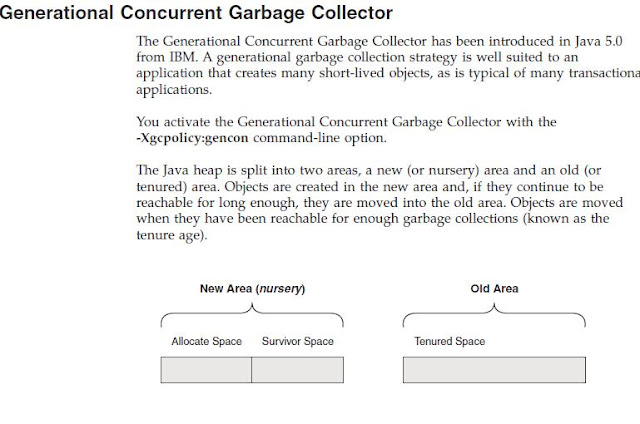

IBM JVM also comes into generation – Nursery and Tenured. Below is some extract from the “IBM Developer Kit and Runtime Environment, Java Technology Edition, Version 6″

IBM JVM comes with lot more optimizations and options, but we wont be discussing much about them, may be I would explain them in another post dedicated to IBM JVM.

IN the current post I am only going to discuss about Sun HotSpot JVM.

One of the advantages of generational collection is that it can make garbage collection pauses shorter by not collecting all generations at once. When the allocator is unable to fulfill an allocation request, it first triggers a minor collection, which only collects the young generation. A Minor Collection often leads to shorter pause, and claims significant amount of memory. If memory freed by minor collection is not enough, GC performs collection in higher generations which contains old objects. When older generations need to be collected there is a major collection that is often much slower because it involves all living objects. Old Generation collections are infrequent and take longer time to complete. Generally, all the JVM’s are using the copying algorithm for young generation, but increase performance to give better throughput, by default they are using copyingalgorithm with multiple threads.

Regarding garbage collection in old generation, there has been lot of thought and there are few options like – Mark-Sweep-Compact, Marking-Summary-Compact, and Concurrent Mark-Concurrent Sweep. We have already discussed Mark-Sweep-Compact algorithm. Rest two are also very similar to Mark-Sweep-Compact with slight changes.

Marking-Summary-Compact -

Marking Phase:

Several threads would run.

Heap would be divided into regions.

One thread per region would run.

Each region has data. Data would be updated by thread with information about the size & location of the live objects.

Summary Phase: (One thread)

Summary phase examines the density of each region & calculates a point after which, space recovered after compacting would be worth. Region left of that point is referred as “Dense Prefix”. Assume, our heap is in following stage(several cycles of GC has already run). The region left to the bold line is Dense prefix. If objects from the right side are moved to left side, we can get chunk of free space on right side. Summary phase would would store the new location(in left side) for live objects in right side.

Compaction phase:(Multiple threads)

Compaction phase uses the data prepared by the summary phase to identify, which regions are to be compacted, and which objects to move, and new location of the objects(in case they are to be moved). Multiple threads run independently, one in each region. And move objects to their new location.

One drawback with above discussed algorithm, is pause time’s. IN order to reduce pause time, we have an option of Concurrent Mark-Concurrent Sweep.

Concurrent Mark Sweep(CMS Collector) – This algorithm uses multiple threads during marking phase(indeed marking is three step process-Initial Mark, concurrent Mark, and Remark), and at the end ensures all live objects are marked. After marking is done, there is concurrent sweep, and all the garbage is collected. Important point to note is there is no compaction. Benefit of this algorithm over previous ones is that pauses time is very minimal, and drawback is that memory is left fragmented after GC runs, since there is no compaction.

J2SE 5.0 gave us several options of setting the GC – Serial, Parallel, Parallel Compacting, and CMS. Each applying a combination of two algorithms ( one for young generation and one for old). Below table summarizes the combination used by each option( we can set any one option) -

But with all kind of Mark Compact come with two limitations –

Run time is proportional to size of heap, i.e. O(Size_Of_Heap) There is always some pause time, we cant ignore them completely even in CMS also. Personally I have not observed but I believe on several 100 GigaBytes of hep, we might get pause time of 1 minute. And 1 minute is huge time.

Recently I watched a Presentation over ”Do you really get memory” by Jevgeni Kabanov, and understood in more detail about some really latest most efficient garbage collection algorithms. Below is the extract from his presentation which i watched over infoq -

http://www.infoq.com/presentations/Do-You-Really-Get-MemoryJDK6 Garbage First(G1) Garbage Collecor

Like Other HotSpot GC’s, G1 is generational means it has concept of old objects and new objects. Big difference between G1 and other Garbage collector is that now there is no physical difference between young generation and old generation.

Whole heap is divided into multiple regions.

Each region is of same size.

Few set of regions belong to young generation and few set to old generation.

SO, young generation is a non-contiguous set of regions, and similar is old generation. G1 is flexible enough to move objects from new to old and vice versa any time.

Key idea it separates whole heap into regions( possibly 100MB each). It tries to monitor how full of garbage each of region is, how quickly objects are getting allocated, and tries to predict how much memory would be needed. For example, it predicts in next 1 minute 90MB of memory would be required, and there is a region(of size 100 MB), in which mostly is garbage(>90MB), so it only need to perform GC over this particular region, and all memory requirements would be fulfilled. So run time of garbage collection is proportional to the heap size, but proportional to the amount of memory required.

Separate regions are called Cards, and it tracks dependencies between cards, i.e. which card is referring to which card. It maintains a small graph. On every assignment card graph is updated. So, we loose a bit of speed on assignment, which is a trade off, but truse me the difference in speed is very minimal.

In 2013 -

Oracle has announced that they would remove the PermGen from the Java 8 version of HotSpot JVM.